Anthropic’s Model Context Protocol: The Universal Key to Smarter AI

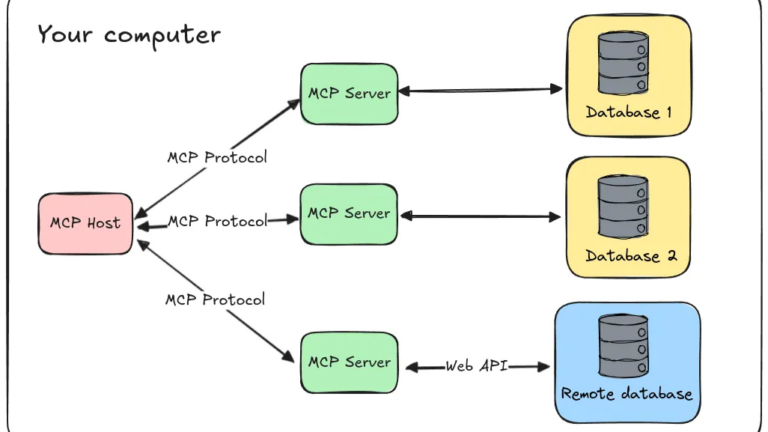

Imagine you’re juggling a dozen apps—Slack for team chats, GitHub for code, a database for analytics, and Google Drive for docs. Now picture an AI assistant that can zip through all of them, grabbing the exact file, query, or message you need, maybe even kicking off a task like sending an email. That’s not a…